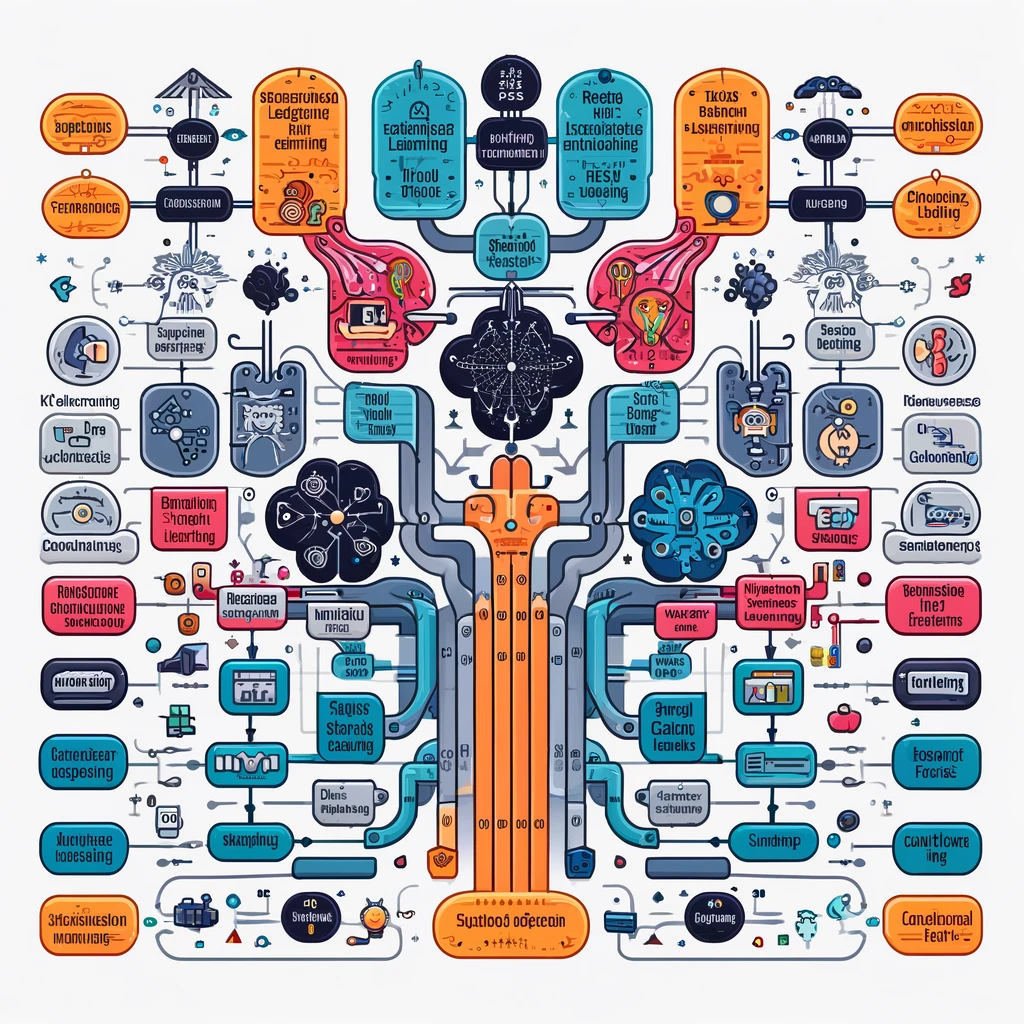

Supervised machine learning algorithms form the backbone of data-driven decision-making in various industries. Whether you’re working on predicting continuous outcomes or classifying data into distinct categories, supervised learning offers powerful tools for solving these problems. In this guide, we’ll walk through the most popular supervised machine learning algorithms, highlighting their uses, advantages, and key concepts.

What is Supervised Machine Learning?

Supervised machine learning involves training a model on a labeled dataset. This means the data includes both input features and the corresponding correct output. The model learns from this data to make predictions or decisions when new, unseen data is introduced.

There are two main types of tasks in supervised learning:

- Regression: Predicting continuous values (e.g., price of a house).

- Classification: Categorizing data into discrete labels (e.g., spam vs. not spam).

Now, let’s dive into the top supervised machine learning algorithms.

1. Linear Regression

Type: Regression

Use Case: Predicting continuous values.

Linear regression is one of the simplest machine learning algorithms. It assumes a linear relationship between the input variables (features) and the target variable. This algorithm is commonly used for tasks like predicting house prices, sales forecasts, and more.
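To make the idea concrete, here is a minimal sketch of ordinary least squares for a single feature, written with the standard library only. The function name `fit_line` and the house-size data are made up for illustration; a real project would usually rely on a library instead.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: house size (hundreds of sq ft) vs. price (thousands)
sizes = [10, 15, 20, 25]
prices = [200, 300, 400, 500]

slope, intercept = fit_line(sizes, prices)
predicted_price = slope * 30 + intercept  # prediction for an unseen size
```

Because the toy data lies exactly on a line, the fitted line reproduces it; real data would leave some residual error.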

Key Advantages:

- Easy to interpret.

- Fast to train and implement.

- Works well for problems with a linear relationship between variables.

2. Logistic Regression

Type: Classification

Use Case: Binary or multi-class classification.

Despite its name, logistic regression is used for classification problems. It estimates probabilities using the logistic function and is effective for tasks like email spam detection, medical diagnosis, and customer churn prediction.
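As a rough illustration, the prediction step can be sketched as a weighted sum passed through the logistic (sigmoid) function. The weights and bias below are invented for the example rather than learned from data.

```python
import math

def sigmoid(z):
    """Logistic function: maps any real number to the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(features, weights, bias):
    """Probability of the positive class for one example."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)

# Hypothetical, hand-picked parameters (a trained model would learn these)
weights = [1.5, -0.5]
bias = -1.0

probability = predict_proba([2.0, 1.0], weights, bias)  # z = 3.0 - 0.5 - 1.0 = 1.5
label = 1 if probability >= 0.5 else 0  # 0.5 is a common default cutoff
```

The 0.5 threshold is a convention; in applications such as medical diagnosis it is often moved to trade false positives against false negatives.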

Key Advantages:

- Good for binary classification tasks.

- Easy to implement and interpret.

- Efficient and requires fewer resources.

3. Support Vector Machines (SVM)

Type: Classification & Regression

Use Case: Separating classes by a decision boundary.

Support Vector Machines work by finding the hyperplane that separates the classes with the largest possible margin. With kernel functions, they can also learn non-linear boundaries. SVM is particularly useful for high-dimensional spaces and is often used in image classification and text categorization.
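The sketch below shows the two quantities a linear SVM works with: the signed decision value for a point and the hinge loss that penalizes points on the wrong side of the margin. The hyperplane parameters `w` and `b` are assumed values chosen for illustration, not the result of training.

```python
def decision_value(x, w, b):
    """Signed score: positive on one side of the hyperplane, negative on the other."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def hinge_loss(x, y, w, b):
    """Hinge loss for a label y in {-1, +1}.

    Zero when the point is on the correct side and outside the margin,
    positive when it is inside the margin or misclassified.
    """
    return max(0.0, 1.0 - y * decision_value(x, w, b))

# Hypothetical hyperplane: x1 + x2 - 3 = 0
w, b = [1.0, 1.0], -3.0

loss_outside_margin = hinge_loss([3.0, 2.0], +1, w, b)  # decision value 2.0
loss_inside_margin = hinge_loss([1.0, 1.5], -1, w, b)   # decision value -0.5
```

The second point is classified correctly but sits inside the margin, so it still contributes a loss. Training an SVM amounts to choosing `w` and `b` to keep such losses small while keeping the margin wide.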

Key Advantages:

- Works well in high-dimensional spaces.

- Effective when the number of features is greater than the number of samples.

- Performs well when the classes are separated by a clear margin.

4. Decision Trees

Type: Classification & Regression

Use Case: Decision-making processes.

Decision trees split the data based on feature values to make predictions. They work well for both classification and regression tasks and are widely used in finance, healthcare, and marketing for decision-making processes.
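A single split can be sketched as follows: try each candidate threshold on one feature and keep the one that leaves the purest groups, measured here by Gini impurity (one common criterion among several). The ages and purchase labels are made-up data.

```python
def gini(labels):
    """Gini impurity: 0.0 for a pure group, higher for mixed groups."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_threshold(values, labels):
    """Return (threshold, weighted_gini) for the best split on one numeric feature."""
    best = (None, gini(labels))  # start from the impurity of not splitting at all
    distinct = sorted(set(values))
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2  # midpoint between neighbouring values
        left = [l for v, l in zip(values, labels) if v <= t]
        right = [l for v, l in zip(values, labels) if v > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (t, score)
    return best

# Hypothetical data: customer age vs. whether they bought the product
ages = [22, 25, 30, 45, 50, 60]
bought = ["no", "no", "no", "yes", "yes", "yes"]

threshold, impurity = best_threshold(ages, bought)
```

A full tree repeats this search recursively on each resulting group, across all features, until a stopping rule such as maximum depth is reached.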

Key Advantages:

- Easy to visualize and interpret.

- Handles both categorical and numerical data.

- Requires minimal data preparation.

5. Random Forest

Type: Classification & Regression

Use Case: Reducing overfitting with multiple trees.

Random Forest is an ensemble of decision trees. It combines the predictions of several trees to make a more robust and accurate model. It’s frequently used in financial modeling, recommendation systems, and customer segmentation.
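Two ingredients of this ensemble can be sketched in a few lines: each tree is trained on a bootstrap sample of the rows, and the forest's answer is the majority vote of the trees. Tree training itself is omitted here, a real forest typically also samples a random subset of features at each split, and the per-tree predictions below are placeholder values.

```python
import random
from collections import Counter

def bootstrap_sample(rows, rng):
    """Draw len(rows) rows with replacement, as each tree would see them."""
    return [rng.choice(rows) for _ in rows]

def majority_vote(predictions):
    """Combine the per-tree class predictions into one forest prediction."""
    return Counter(predictions).most_common(1)[0][0]

rng = random.Random(0)           # fixed seed so the sketch is repeatable
rows = list(range(10))           # stand-in for ten training rows
sample = bootstrap_sample(rows, rng)

# Hypothetical predictions from five trees for a single transaction
tree_predictions = ["fraud", "ok", "fraud", "fraud", "ok"]
forest_prediction = majority_vote(tree_predictions)
```

For regression, the vote is replaced by an average of the trees' numeric predictions.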

Key Advantages:

- Reduces overfitting in decision trees.

- High accuracy and stable performance.

- Can tolerate some missing values in many implementations, though support varies by library.

6. K-Nearest Neighbors (KNN)

Type: Classification & Regression

Use Case: Pattern recognition.

K-Nearest Neighbors is a simple algorithm that classifies a data point based on the majority class among its k nearest neighbors; for regression, it averages the neighbors' values instead. KNN is used in recommendation systems, image recognition, and customer behavior analysis.
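A minimal sketch of KNN classification, assuming Euclidean distance and a small in-memory dataset. The points, labels, and the choice of `k=3` are illustrative only.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Return the majority label among the k training points closest to query."""
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical two-cluster dataset
points = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
labels = ["A", "A", "A", "B", "B", "B"]

near_first_cluster = knn_predict(points, labels, (2, 2))
near_second_cluster = knn_predict(points, labels, (6, 5))
```

Every prediction scans the whole training set, which is why KNN is cheap to set up but can become slow at prediction time on large datasets.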

Key Advantages:

- Simple and intuitive.

- No explicit training phase: the model simply stores the data, although prediction becomes slower as the dataset grows.

- Effective for small datasets.

7. Naive Bayes

Type: Classification

Use Case: Text classification and sentiment analysis.

Naive Bayes is a probabilistic classifier based on Bayes’ theorem. Despite its simplicity, it performs remarkably well in tasks like spam filtering, sentiment analysis, and document classification.
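For text, one common variant counts words per class and multiplies the resulting word probabilities, treating words as independent given the class (the "naive" assumption). The sketch below assumes whitespace tokenization and add-one (Laplace) smoothing; the four training messages are invented.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(documents, labels):
    """Count documents per class and words per class."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, label in zip(documents, labels):
        words = text.lower().split()
        word_counts[label].update(words)
        vocabulary.update(words)
    return class_counts, word_counts, vocabulary

def classify(text, model):
    """Pick the class with the highest log-probability for the text."""
    class_counts, word_counts, vocabulary = model
    total_docs = sum(class_counts.values())
    scores = {}
    for label, doc_count in class_counts.items():
        total_words = sum(word_counts[label].values())
        log_prob = math.log(doc_count / total_docs)  # class prior
        for word in text.lower().split():
            # Add-one smoothing keeps unseen words from zeroing the product
            count = word_counts[label][word] + 1
            log_prob += math.log(count / (total_words + len(vocabulary)))
        scores[label] = log_prob
    return max(scores, key=scores.get)

# Hypothetical training messages
docs = ["win free prize now", "free money offer", "meeting at noon", "lunch meeting tomorrow"]
labels = ["spam", "spam", "ham", "ham"]
model = train_naive_bayes(docs, labels)

first = classify("free prize", model)
second = classify("lunch meeting", model)
```

Working in log-probabilities avoids numerical underflow when many small probabilities are multiplied together.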

Key Advantages:

- Fast and efficient.

- Works well with large datasets.

- Performs well with text data.

8. Gradient Boosting Machines (GBM)

Type: Classification & Regression

Use Case: Handling complex datasets.

Gradient Boosting Machines are a powerful ensemble technique that builds models sequentially, improving predictions at each step. GBMs are widely used in competitions and business applications like customer behavior prediction and fraud detection.
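The sequential idea can be sketched for regression with squared error: start from the mean, then repeatedly fit a one-split tree (a "stump") to the current residuals and add a scaled copy of it to the model. The helper names, the learning rate of 0.5, and the data are assumptions for illustration; production libraries add many refinements.

```python
def fit_stump(xs, residuals):
    """Best single-split regressor: predicts the mean residual on each side."""
    best = None
    distinct = sorted(set(xs))
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, t, left_mean, right_mean)
    _, t, left_mean, right_mean = best
    return lambda x: left_mean if x <= t else right_mean

def gradient_boost(xs, ys, n_rounds=20, learning_rate=0.5):
    """Fit stumps one after another, each on the residuals left by the previous ones."""
    base = sum(ys) / len(ys)  # initial prediction: the mean target
    stumps = []
    predictions = [base] * len(xs)
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * stump(x) for x, p in zip(xs, predictions)]
    return lambda x: base + learning_rate * sum(s(x) for s in stumps)

def mean_squared_error(model, xs, ys):
    return sum((y - model(x)) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical step-shaped data that a straight line would fit poorly
xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 3.0, 3.1, 6.0, 6.2]

mean_value = sum(ys) / len(ys)
baseline_mse = mean_squared_error(lambda x: mean_value, xs, ys)
boosted_mse = mean_squared_error(gradient_boost(xs, ys), xs, ys)
```

On this toy data the boosted model's training error falls well below that of the constant baseline. Training error alone says nothing about generalization, so the number of rounds and the learning rate are normally tuned on held-out data.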

Key Advantages:

- High predictive accuracy.

- Handles both classification and regression tasks.

- Flexible with hyperparameter tuning.

9. AdaBoost (Adaptive Boosting)

Type: Classification & Regression

Use Case: Improving weak learners.

AdaBoost builds an ensemble of weak learners (such as shallow decision trees) one at a time, increasing the weights of misclassified data points so that each new learner concentrates on the examples its predecessors got wrong. It's effective in areas such as face detection and customer churn analysis.
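One round of the classic binary AdaBoost reweighting can be sketched like this, assuming labels in {-1, +1} and a weak learner whose weighted error is strictly between 0 and 1. The predictions below are made up rather than produced by a real learner.

```python
import math

def adaboost_round(weights, y_true, y_pred):
    """One AdaBoost round: returns (alpha, renormalized sample weights).

    alpha is the say this weak learner gets in the final vote.
    Assumes the weighted error is strictly between 0 and 1.
    """
    error = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p)
    alpha = 0.5 * math.log((1 - error) / error)
    # Misclassified points (t * p == -1) are scaled up, correct ones scaled down
    updated = [w * math.exp(-alpha * t * p) for w, t, p in zip(weights, y_true, y_pred)]
    total = sum(updated)
    return alpha, [w / total for w in updated]

y_true = [1, 1, -1, -1, 1]
y_pred = [1, 1, -1, -1, -1]   # hypothetical weak learner: last point is wrong
weights = [0.2] * 5           # start with uniform weights

alpha, new_weights = adaboost_round(weights, y_true, y_pred)
```

After the update, the single misclassified point carries half of the total weight, so the next weak learner is pushed to get it right.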

Key Advantages:

- Improves the performance of weak learners.

- Often resists overfitting on clean data, though it is sensitive to noisy labels and outliers.

- Easy to implement.

10. XGBoost (Extreme Gradient Boosting)

Type: Classification & Regression

Use Case: Structured (tabular) data where both accuracy and speed matter, including machine learning competitions.

XGBoost is a fast and efficient implementation of gradient boosting. It’s known for its speed and performance and is a favorite among data scientists for tasks like predictive modeling in e-commerce and finance.

Key Advantages:

- High efficiency and scalability.

- Built-in regularization helps control overfitting.

- Extensive use in data science competitions.

11. LightGBM

Type: Classification & Regression

Use Case: Large datasets and high-performance tasks.

LightGBM is another gradient boosting algorithm, optimized for performance and large datasets. It’s frequently used in industries like telecommunications, finance, and healthcare for real-time prediction systems.

Key Advantages:

- Fast training speed.

- Handles large datasets efficiently.

- Reduces memory usage.

12. CatBoost

Type: Classification & Regression

Use Case: Handling categorical data efficiently.

CatBoost is known for its ability to handle categorical features automatically, making it ideal for tasks like customer segmentation, fraud detection, and demand forecasting.

Key Advantages:

- Excellent performance with categorical data.

- Reduces overfitting.

- Works well with default parameters.

13. Artificial Neural Networks (ANN)

Type: Classification & Regression

Use Case: Solving complex, non-linear problems.

Artificial Neural Networks are loosely inspired by the human brain: layers of simple units, each applying a weighted sum followed by a non-linear activation. They are highly effective for tasks that require learning from large datasets, and they form the basis of deep learning for tasks such as image recognition, language processing, and recommendation systems.
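To show how a hidden layer captures a non-linear pattern, here is a tiny forward pass that computes XOR, a function no single straight line can separate. The weights are hand-picked for the illustration; a real network would learn them through training.

```python
def relu(z):
    """Rectified linear unit: a common non-linear activation."""
    return max(0.0, z)

def tiny_network(x1, x2):
    # Hidden layer: two neurons with hand-picked (not learned) weights and biases
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)
    # Output layer: a weighted sum of the hidden activations
    return 1.0 * h1 - 2.0 * h2

# Evaluate the network on all four binary input pairs
outputs = {(a, b): tiny_network(a, b) for a in (0, 1) for b in (0, 1)}
```

Without the `relu` step the whole network would collapse into a single linear function, which is why the activation matters.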

Key Advantages:

- Powerful in solving complex problems.

- Can model non-linear relationships.

- Effective with large datasets.

14. Ridge and Lasso Regression

Type: Regression

Use Case: Reducing overfitting.

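Ridge and Lasso are regularization techniques used with linear regression to reduce overfitting by penalizing large coefficients. Ridge adds an L2 penalty (the sum of squared coefficients), which shrinks coefficients toward zero. Lasso adds an L1 penalty (the sum of absolute coefficients), which can set some coefficients exactly to zero and so doubles as feature selection. Both are commonly used in predictive modeling in finance and healthcare.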
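The difference between the two penalties is easiest to see with a single feature and no intercept, where both have a closed form. The sketch assumes the objectives "squared error + lam * w^2" for Ridge and "squared error + lam * |w|" for Lasso; libraries often scale these terms differently, so the `lam` values here are not directly comparable to library settings.

```python
def soft_threshold(value, threshold):
    """Shrink value toward zero by threshold, clipping at zero."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0

def ridge_coefficient(xs, ys, lam):
    """Minimizer of sum((y - w*x)^2) + lam * w^2."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

def lasso_coefficient(xs, ys, lam):
    """Minimizer of sum((y - w*x)^2) + lam * |w|."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return soft_threshold(sxy, lam / 2) / sxx

# Hypothetical data with a weak relationship: y = 0.1 * x
xs = [1.0, 2.0, 3.0]
ys = [0.1, 0.2, 0.3]

unregularized = ridge_coefficient(xs, ys, lam=0.0)   # plain least squares
ridge_shrunk = ridge_coefficient(xs, ys, lam=14.0)   # halved, but not zero
lasso_zeroed = lasso_coefficient(xs, ys, lam=4.0)    # driven exactly to zero
```

Ridge keeps shrinking the coefficient as `lam` grows without ever reaching zero, whereas Lasso removes the feature entirely once the penalty is large enough.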

Key Advantages:

- Helps in reducing model complexity.

- Helps prevent overfitting.

- Effective in high-dimensional datasets.

15. Elastic Net

Type: Regression

Use Case: Combining the power of Ridge and Lasso.

Elastic Net combines Ridge and Lasso regression, balancing their benefits. It’s used in applications where there are multiple correlated variables, like in genomics and financial modeling.
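The combination can be sketched as a single penalty that mixes the sum of absolute coefficients (Lasso-style) with the sum of squared coefficients (Ridge-style). The parameter names `alpha` and `l1_ratio` and the exact weighting are assumptions for this sketch; libraries use slightly different scaling conventions.

```python
def elastic_net_penalty(coefficients, alpha, l1_ratio):
    """Weighted mix of the L1 and L2 penalties.

    l1_ratio = 1.0 gives a pure Lasso-style penalty,
    l1_ratio = 0.0 gives a pure Ridge-style penalty.
    """
    l1 = sum(abs(w) for w in coefficients)
    l2 = sum(w * w for w in coefficients)
    return alpha * (l1_ratio * l1 + (1 - l1_ratio) * l2)

coefficients = [3.0, -4.0]  # hypothetical model coefficients

pure_l1 = elastic_net_penalty(coefficients, alpha=1.0, l1_ratio=1.0)
pure_l2 = elastic_net_penalty(coefficients, alpha=1.0, l1_ratio=0.0)
mixed = elastic_net_penalty(coefficients, alpha=2.0, l1_ratio=0.5)
```

This penalty is added to the usual squared-error loss during training, and the mixing ratio is typically chosen by cross-validation.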

Key Advantages:

- Balances the strengths of Ridge and Lasso.

- Helps prevent overfitting.

- Handles multicollinearity effectively.

Conclusion

Supervised machine learning algorithms are essential tools for extracting insights and making predictions from data. Whether you’re working on a simple regression problem or building a complex classification model, these algorithms can be tailored to suit your needs. As data continues to grow, mastering these supervised learning algorithms will be crucial in making data-driven decisions and staying ahead in a competitive landscape.

By understanding the strengths and applications of each algorithm, you can make informed choices on which to use in your projects. Stay tuned as we delve into more machine learning concepts in upcoming posts!
