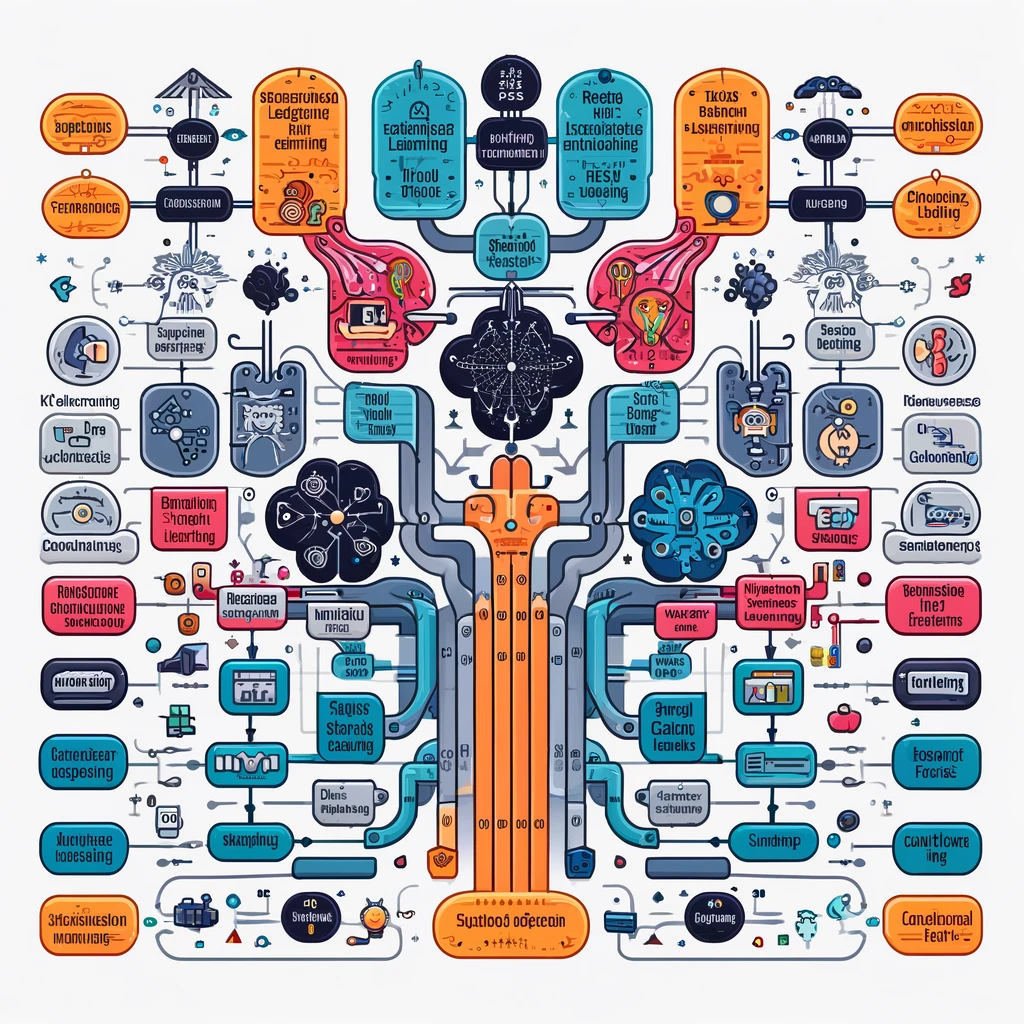

Here is a list of popular machine learning models, categorized by their types:

1. Supervised Learning Models

These models are trained on labeled data, meaning each training example comes with a known target output.

- Linear Regression: Used for predicting a continuous value (e.g., house prices).

- Logistic Regression: Used for binary classification tasks (e.g., spam or not spam).

- Support Vector Machines (SVM): Used for classification and regression. For classification, it finds the hyperplane that separates the classes with the largest margin.

- Decision Trees: A tree-like structure for classification and regression tasks.

- Random Forest: An ensemble of decision trees, used for classification and regression.

- K-Nearest Neighbors (KNN): Used for classification and regression. It predicts from the k closest training points (majority vote for classification, average for regression).

- Naive Bayes: A probabilistic model used for classification based on Bayes’ Theorem.

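- Gradient Boosting Machines (GBM): An ensemble of decision trees built sequentially, where each new tree is fit to the errors of the trees before it.

- XGBoost: An optimized implementation of gradient boosting, highly efficient for large datasets.

- AdaBoost: A boosting method that reweights the training examples so that each new weak learner focuses on the ones previously misclassified.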

- Ridge Regression: A linear model with L2 regularization.

- Lasso Regression: A linear model with L1 regularization.
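
To make one of the entries above concrete, here is a minimal sketch of K-Nearest Neighbors classification using only the Python standard library. The function name `knn_predict` and the toy data are made up for illustration; in practice you would likely reach for a library implementation instead.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Euclidean distance from the query to every training point
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k closest neighbours and count their labels
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Toy data (invented for this example): two well-separated groups
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (6.0, 6.0), (6.5, 7.0), (7.0, 6.5)]
labels = ["a", "a", "a", "b", "b", "b"]

print(knn_predict(points, labels, (1.8, 1.6)))
print(knn_predict(points, labels, (6.4, 6.2)))
```

The labeled examples are the whole "model" here: there is no training step, only a lookup at prediction time.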

2. Unsupervised Learning Models

These models work on unlabeled data to find hidden patterns.

- K-Means Clustering: Divides data into k distinct clusters based on feature similarity.

- Hierarchical Clustering: Builds a hierarchy of nested clusters (a dendrogram), useful when the number of clusters is not known in advance.

- DBSCAN: A density-based clustering method that groups points in dense regions and marks points in sparse regions as noise.

- Principal Component Analysis (PCA): Reduces the dimensionality of data by projecting it onto a lower-dimensional space.

- Independent Component Analysis (ICA): Similar to PCA, but focuses on making the components statistically independent.

- t-SNE (t-Distributed Stochastic Neighbor Embedding): Used for visualizing high-dimensional data in 2D or 3D space.

- Autoencoders: Neural networks used for unsupervised learning, often for feature extraction or dimensionality reduction.
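
As an illustration of the clustering idea, the following is a rough sketch of K-Means (Lloyd's algorithm) in plain Python. The starting centroids are passed in by hand to keep the example deterministic, which is an assumption of this sketch; real implementations usually pick them randomly or with a smarter seeding scheme.

```python
import math

def kmeans(points, centroids, iterations=10):
    """Lloyd's algorithm: alternate assignment and centroid update steps."""
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid
        clusters = [[] for _ in centroids]
        for p in points:
            idx = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[idx].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid)
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
    return centroids, clusters

# Toy data (invented for this example): two obvious groups, k = 2
data = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
final_centroids, final_clusters = kmeans(data, centroids=[(1, 1), (9, 8)])
print(final_centroids)
print(final_clusters)
```

No labels are used anywhere: the grouping comes purely from distances between points.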

3. Semi-Supervised Learning Models

These models use a small amount of labeled data with a large amount of unlabeled data.

- Self-training: A model initially trained on labeled data is used to label the unlabeled data, and its most confident predictions are added to the training set.

- Co-training: Two models train on different feature subsets (views) of the same dataset and help label each other’s unlabeled data.
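
The self-training loop can be sketched as follows. This is only one possible reading: the base model is a one-dimensional nearest-centroid classifier, and the confidence rule (a `margin` between the closest and second-closest centroid) is an assumption chosen to keep the example short.

```python
def class_centroids(xs, ys):
    """Mean feature value per class label."""
    sums = {}
    for x, y in zip(xs, ys):
        total, count = sums.get(y, (0.0, 0))
        sums[y] = (total + x, count + 1)
    return {y: total / count for y, (total, count) in sums.items()}

def self_train(labeled_x, labeled_y, unlabeled_x, margin=1.0, rounds=5):
    """Repeatedly pseudo-label confident points and retrain (needs >= 2 classes)."""
    xs, ys = list(labeled_x), list(labeled_y)
    pool = list(unlabeled_x)
    for _ in range(rounds):
        cents = class_centroids(xs, ys)  # "train" on everything labeled so far
        remaining = []
        for x in pool:
            ranked = sorted(cents, key=lambda y: abs(x - cents[y]))
            best, runner_up = ranked[0], ranked[1]
            # Confident only if the best class is clearly closer than the runner-up
            if abs(x - cents[runner_up]) - abs(x - cents[best]) >= margin:
                xs.append(x)
                ys.append(best)
            else:
                remaining.append(x)
        if len(remaining) == len(pool):  # nothing new was labeled, so stop
            break
        pool = remaining
    return class_centroids(xs, ys), pool

# Toy data (invented): two labeled points, five unlabeled ones
model, still_unlabeled = self_train([0.0, 10.0], [0, 1], [1.0, 2.0, 8.0, 9.0, 5.2])
print(model)
print(still_unlabeled)
```

The point near the middle stays unlabeled because the model never becomes confident about it, which is the behaviour a confidence threshold is meant to give.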

4. Reinforcement Learning Models

These models learn by interacting with an environment and receiving feedback (rewards).

- Q-Learning: A value-based reinforcement learning algorithm that learns the expected return of each action in each state, and from that the best action to take.

- Deep Q-Networks (DQN): Combines Q-learning with deep neural networks.

- Proximal Policy Optimization (PPO): A policy gradient method for reinforcement learning that works with both discrete and continuous action spaces.

- Actor-Critic Methods: Combines value-based and policy-based methods for more efficient learning.
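
To show what learning from rewards looks like in code, here is a small sketch of tabular Q-learning. The environment (a five-state chain with a reward at the right end), the hyperparameters, and the purely random exploration policy are all assumptions made for this example.

```python
import random

N_STATES = 5      # states 0..4; state 4 is the goal (terminal)
ACTIONS = (0, 1)  # 0 = move left, 1 = move right

def step(state, action):
    """Deterministic chain: reward 1.0 only when the goal state is reached."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            # Q-learning is off-policy, so uniform random exploration is enough here
            action = rng.choice(ACTIONS)
            next_state, reward, done = step(state, action)
            # Bootstrapped target: reward plus discounted best next-state value
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q

q_table = q_learning()
# Greedy policy for the non-terminal states
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

After training, the greedy policy read off the Q-table should move right in every state, since that is the shortest path to the reward.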

5. Ensemble Learning Models

These models combine multiple models to improve performance.

- Bagging: Trains multiple models on bootstrap samples of the data and combines their predictions by voting or averaging, as in Random Forest.

- Boosting: Sequentially builds models to correct the errors of the previous models (e.g., AdaBoost, XGBoost).

- Stacking: Combines the outputs of multiple models into a meta-model for better predictions.
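
The simplest way to combine classifiers is majority (hard) voting, which is also how bagging aggregates class predictions. The sketch below shows only that aggregation step; the three threshold rules are hypothetical stand-ins for trained models.

```python
from collections import Counter

def majority_vote(models, x):
    """Return the label predicted by the most models."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]

# Hypothetical weak classifiers: each looks at the input in a different way
models = [
    lambda x: int(x[0] > 0.5),
    lambda x: int(x[1] > 0.5),
    lambda x: int(x[0] + x[1] > 1.0),
]

print(majority_vote(models, (0.9, 0.2)))  # votes: 1, 0, 1
print(majority_vote(models, (0.2, 0.6)))  # votes: 0, 1, 0
```

An individual model can be wrong on a given input, and the ensemble still gets the answer right as long as most of the models agree on it.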

6. Neural Network Models

These are deep learning models often used for complex tasks like image recognition or natural language processing.

- Artificial Neural Networks (ANN): A basic neural network used for classification and regression.

- Convolutional Neural Networks (CNN): Specialized for image and video data.

- Recurrent Neural Networks (RNN): Used for sequential data like time series or text.

- Long Short-Term Memory Networks (LSTM): A type of RNN designed to learn long-term dependencies.

- Generative Adversarial Networks (GANs): Used to generate data similar to a given dataset (e.g., images).

- Transformer Models: Widely used in natural language processing (e.g., GPT, BERT).
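
To give a feel for what a basic neural network computes, here is a tiny forward pass through a two-layer network that implements XOR. The weights are set by hand for illustration rather than learned, and the step activation is used only because it makes the arithmetic easy to follow.

```python
def step(z):
    """Step activation: fires (1) when the weighted input is positive."""
    return 1 if z > 0 else 0

def xor_network(x1, x2):
    # Hidden layer: two neurons with hand-picked weights and biases
    h_or = step(1.0 * x1 + 1.0 * x2 - 0.5)   # behaves like OR
    h_and = step(1.0 * x1 + 1.0 * x2 - 1.5)  # behaves like AND
    # Output neuron: "OR but not AND", which is XOR
    return step(1.0 * h_or - 1.0 * h_and - 0.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

XOR cannot be computed by a single neuron of this kind, which is why the hidden layer is needed. Training a network amounts to finding such weights automatically instead of picking them by hand.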

7. Dimensionality Reduction Models

These models reduce the number of variables in data while retaining as much of the essential information as possible.

- Principal Component Analysis (PCA): Projects data onto a lower-dimensional space.

- Linear Discriminant Analysis (LDA): Similar to PCA, but it uses class labels and focuses on maximizing class separation.

- Factor Analysis: A statistical method for uncovering latent variables in data.
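
As a rough sketch of how PCA projects data onto a lower-dimensional space, the code below finds the first principal component of 2D data and reduces each point to a single coordinate. It uses power iteration on the covariance matrix to keep the example dependency-free; libraries typically use an SVD or eigendecomposition instead. The function name and the data are invented for illustration.

```python
import math

def first_principal_component(points, iterations=100):
    """Return the top principal direction of 2D data and the 1D projections."""
    n = len(points)
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    centered = [(x - mean_x, y - mean_y) for x, y in points]

    # Entries of the 2x2 sample covariance matrix
    cxx = sum(x * x for x, _ in centered) / (n - 1)
    cyy = sum(y * y for _, y in centered) / (n - 1)
    cxy = sum(x * y for x, y in centered) / (n - 1)

    # Power iteration: repeatedly multiply by the covariance matrix and normalize
    v = (1.0, 0.0)
    for _ in range(iterations):
        wx = cxx * v[0] + cxy * v[1]
        wy = cxy * v[0] + cyy * v[1]
        norm = math.hypot(wx, wy)
        v = (wx / norm, wy / norm)

    # Project each centered point onto the principal direction (2D -> 1D)
    scores = [x * v[0] + y * v[1] for x, y in centered]
    return v, scores

# Toy data (invented): points scattered around the line y = x
data = [(2.0, 1.9), (3.0, 3.2), (4.0, 3.9), (5.0, 5.1), (6.0, 5.9)]
direction, scores = first_principal_component(data)
print(direction)
print(scores)
```

Because the points lie close to the diagonal, the principal direction comes out near (0.71, 0.71), and the single score per point preserves most of the spread in the original two coordinates.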

These are some of the most commonly used machine learning models across different tasks like classification, regression, clustering, and more.
